If you’re in the business of video creation, localisation, or global distribution, 2026 promises to be the year when AI dubbing and translation move from pilot projects into real workflows.

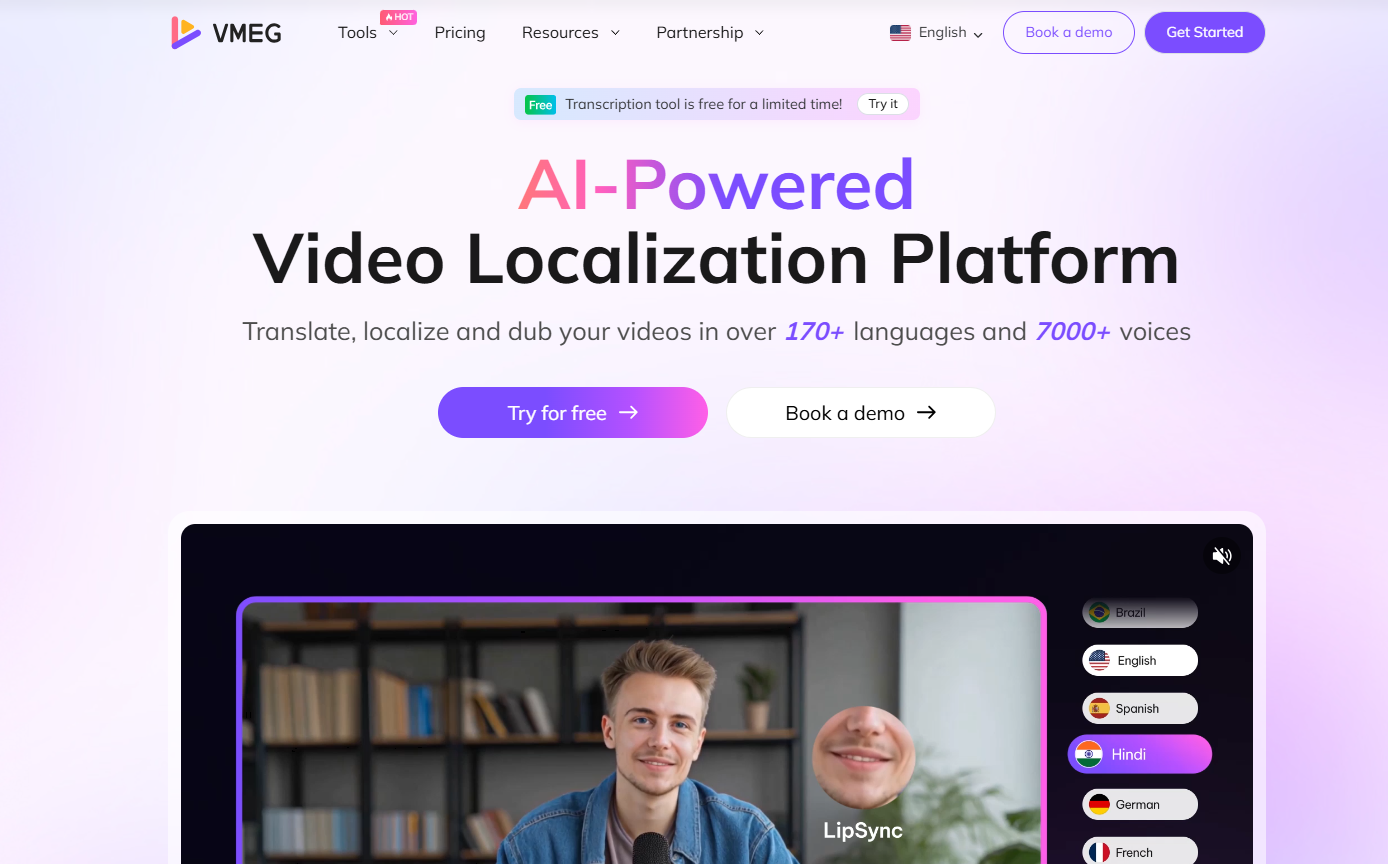

In this article, I’ll walk you through why that’s happening, which six major trends to watch, and how to prepare your workflow for success. I’ll also briefly highlight how an AI video dubbing tool like VMEG AI fits into this ecosystem (without getting too promotional).

Let's start by defining AI dubbing and video translation: the use of AI models for speech recognition, translation, speech synthesis, and, in some cases, lip-sync alignment to create a new version of a video with its audio in a different language, optionally with subtitles.

Slator's 2025 Market Report projects that the language services market will reach US$100 billion by 2026, with AI localisation contributing more than 40% of that growth. Multilingual content is already commonplace on platforms like YouTube and Spotify. A case in point is YouTube's multi-language audio feature, which lets a single video carry audio tracks in dozens of languages. Spotify's Voice Translation project, meanwhile, reproduces a creator's own voice in other languages.

Here’s why 2026 stands out:

In short: when demand, distribution, and tech align, things shift. 2026 is shaping up to be that alignment year.

Previously, creators needed separate channels or separate videos per language. Now, YouTube’s “multi-language audio” feature allows multiple dubbed tracks on the same video. YouTube’s own blog reports that creators saw, on average, more than 25% of watch time come from views of other-language tracks.

Implication: If you’re distributing globally, you no longer need to duplicate your video asset per language; you can reuse the core video and attach dubbed audio tracks. That simplifies operations and boosts reach with one source.

Big platforms and media firms are building AI dubbing into their production processes rather than merely testing it.

In practice, expect voice cloning, automated lip-sync, longer-form content, hybrid processes (AI plus human QC), and adaptable voice assets for the long tail of languages.

Implication: Even if you don’t go full-AI, you need to design a workflow that includes review, quality control, and iterative improvement—not just a “dump content and pray” approach.

Advances like Whisper Large-V3 improve zero-shot speech recognition, while new real-time lip-sync research reduces the “out-of-sync mouth” problem. Multi-speaker diarization can now reliably separate the voices in panel videos, podcasts, and documentaries. Audit vendors on each step (ASR, MT, TTS, and lip-sync) separately rather than on the pipeline as a whole.
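To make "audit each step separately" concrete for the ASR stage, one common approach is to score a vendor's transcript against a human-made reference using word error rate (WER). The sketch below is a minimal, dependency-free version; the function name and the sample sentences are illustrative assumptions, and a real audit would also normalise punctuation and numbers before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER: word-level edit distance divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Illustrative sample: one substitution ("sat" -> "sit") and one dropped "the".
sample_wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
```

Scoring the same reference clips against each vendor gives a per-step number that can be compared directly, independent of how good the downstream translation or voice synthesis is.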

With synthetic audio/video becoming easy to produce, regulation is tightening:

Brands and global creators must build pipelines for consent, rights clearance, and labelling of synthetic media. If you’re dubbing voices or cloning voice assets, make sure you have the relevant talent contracts, rights terms, and disclosure strategies in place.

Because of AI advances and scalable workflows, the cost per language is dropping. Meanwhile, platforms reward better engagement—dubbed videos can drive higher watch-time and retention in non-native languages (as noted above).

Implication: Instead of asking “Can we afford to localise?” ask “Which languages do we localise first?” and “What increment of reach or revenue justifies each new language?” Use ROI thinking: compare the incremental cost of each language against the incremental watch time, subscribers, and leads it brings in from non-primary-language viewers.
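The ROI comparison can be sketched in a few lines. This is a rough illustration only: the `LanguageCandidate` fields, the idea of assigning a money value to each watch hour, and every number in the sample data are assumptions made up for the example, not figures from any platform or report.

```python
from dataclasses import dataclass


@dataclass
class LanguageCandidate:
    language: str
    dubbing_cost: float          # estimated cost to dub the chosen videos
    expected_watch_hours: float  # projected incremental watch time
    value_per_watch_hour: float  # assumed revenue or lead value per hour

    @property
    def roi(self) -> float:
        """Return (gain - cost) / cost for this language."""
        gain = self.expected_watch_hours * self.value_per_watch_hour
        return (gain - self.dubbing_cost) / self.dubbing_cost


def rank_languages(candidates):
    """Order candidates from highest to lowest ROI."""
    return sorted(candidates, key=lambda c: c.roi, reverse=True)


# Hypothetical numbers, for illustration only.
candidates = [
    LanguageCandidate("pt", dubbing_cost=400, expected_watch_hours=2000, value_per_watch_hour=0.25),
    LanguageCandidate("es", dubbing_cost=500, expected_watch_hours=4000, value_per_watch_hour=0.25),
    LanguageCandidate("de", dubbing_cost=600, expected_watch_hours=1800, value_per_watch_hour=0.5),
]
priority_order = [c.language for c in rank_languages(candidates)]
```

The ranking answers "which language first?"; setting a minimum acceptable ROI then answers "which languages are not worth doing yet?".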

For creators and brands, the previous model (“English → then a separate Spanish channel → separate Portuguese channel”) is giving way. With multi-language audio tracks and universal distribution, you can keep a single channel or video asset and serve each market through its own language track.

When you shop for or evaluate tools in 2026, here is what matters (in no particular order, but all worth checking):

Here’s a ready-to-go operational roadmap for creators and brands to put AI dubbing and video translation into practice in 2026:

Use analytics to find the top 10 videos by watch time outside your home language. Focus on the markets where your content already has organic pull.
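As a minimal sketch of that first step, the function below ranks videos by watch time from viewers outside the home language. It assumes you have exported analytics rows into plain dictionaries; the column names (`video_id`, `viewer_language`, `watch_hours`) and the sample data are hypothetical and will differ from whatever your analytics export actually provides.

```python
from collections import defaultdict


def top_videos_outside_home_language(rows, home_language, n=10):
    """Return the top-n (video_id, watch_hours) pairs, counting only
    watch time from viewers whose language differs from home_language."""
    totals = defaultdict(float)
    for row in rows:
        if row["viewer_language"] != home_language:
            totals[row["video_id"]] += row["watch_hours"]
    # Highest watch time first; video ID breaks ties deterministically.
    ranked = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:n]


# Hypothetical export rows, for illustration only.
sample_rows = [
    {"video_id": "vid_a", "viewer_language": "en", "watch_hours": 900.0},
    {"video_id": "vid_a", "viewer_language": "es", "watch_hours": 120.0},
    {"video_id": "vid_b", "viewer_language": "es", "watch_hours": 300.0},
    {"video_id": "vid_b", "viewer_language": "pt", "watch_hours": 50.0},
    {"video_id": "vid_c", "viewer_language": "en", "watch_hours": 400.0},
]
top = top_videos_outside_home_language(sample_rows, home_language="en", n=2)
```

Note that `vid_a` has the most watch time overall but ranks below `vid_b` here, which is the point: the shortlist follows existing foreign-language pull, not total popularity.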

Base language choices on subtitle engagement, audience geography, and projected regional growth potential. Prioritise quality over quantity.

Create glossaries of brand terminology, pronunciation rules, and tone references. A well-defined style guide keeps dubs consistent and culturally appropriate.

As in Amazon’s hybrid model (AP News 2025), let AI handle the first pass for speed and efficiency while human reviewers check emotional accuracy and cultural nuance.

One example of a platform for executing these steps is VMEG AI. As AI dubbing tools mature, creators need solutions that balance scale, accuracy, and governance.

VMEG AI is an emerging platform illustrating these principles in practice:

Store voice consent forms, label synthetic outputs, and retain audit records to comply with the EU AI Act and emerging voice-rights policies.
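One possible shape for such an audit record is sketched below. The field names, the consent checks, and the sample data are assumptions chosen for illustration; they are not a statement of what the EU AI Act or any other regulation specifically requires, so treat this as a starting point to review with legal counsel.

```python
import hashlib
from dataclasses import dataclass
from datetime import date, datetime, timezone


@dataclass(frozen=True)
class VoiceConsent:
    talent_name: str
    consent_document: str  # ID or path of the signed form (hypothetical)
    languages: tuple       # languages the talent agreed to
    valid_until: date


def build_audit_entry(consent, language, audio_bytes, today):
    """Return an audit record for one synthetic dub, or raise if the
    consent on file does not cover it."""
    if today > consent.valid_until:
        raise ValueError("consent has expired")
    if language not in consent.languages:
        raise ValueError(f"no consent on file for language {language!r}")
    return {
        "talent": consent.talent_name,
        "consent_document": consent.consent_document,
        "language": language,
        "synthetic": True,  # label carried alongside the output
        # A hash ties the record to the exact audio file that shipped.
        "audio_sha256": hashlib.sha256(audio_bytes).hexdigest(),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical example data.
consent = VoiceConsent("Example Talent", "consent-0001.pdf", ("es", "pt"), date(2026, 12, 31))
entry = build_audit_entry(consent, "es", b"fake-audio-bytes", today=date(2026, 3, 1))
```

Refusing to create the record when consent is missing or expired means the check happens before a dub is published rather than being discovered afterwards.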

Track retention rates, click-throughs, and audience expansion. Upgrade auto-dubs that perform well to premium human-reviewed versions.

AI video translation isn’t about automation alone; it’s about access. In 2026, the ability to communicate honestly with viewers in their native language will distinguish the brands and creators that go global from those that remain local.

Begin with a single flagship video, two languages, and measurable results. Then build from there—fearlessly.

2026 is seen as a pivotal year because the demand for global content, the technology for AI dubbing, the distribution platforms like YouTube, and the legal frameworks are all aligning. This convergence is moving AI translation from an experimental phase into a standard part of video production workflows.

This model refers to the new standard where you can maintain a single YouTube channel or video asset and serve multiple global audiences by attaching different language audio tracks. It replaces the old method of creating separate channels for each language, which simplifies your content management and broadens your reach.

Yes, absolutely. While AI can handle the initial translation and dubbing process with increasing accuracy, human quality control is crucial. A human reviewer ensures emotional nuance, cultural appropriateness, and brand consistency, which AI might miss. A hybrid approach is often the most effective.

You should prioritise platforms that offer high accuracy in speech recognition and translation, precise lip-sync capabilities, and a wide range of languages and authentic-sounding voices. Also, ensure the tool complies with regulations like the EU AI Act and integrates smoothly with your existing video editing and distribution tools.

You can begin by using your video analytics to find a top-performing video that already gets views from a specific non-native language audience. Then, select just one or two priority languages to test. This data-driven approach allows you to start small, measure the return on investment, and scale your efforts effectively.